Interpretable Maximal Discrepancies Metrics for Analyzing and Improving Generative Models

Office of Naval Research, Grant # N00014-21-1-2300, Principal Investigator: Austin J. Brockmeier, 4/2021–4/2024.

Overview

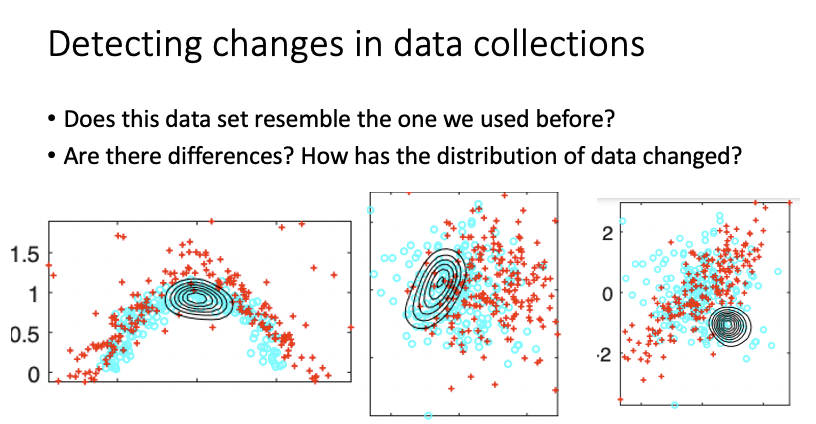

Divergence measures quantify the dissimilarity between probability distributions, with distance metrics as a special case. They are fundamental to hypothesis testing, information theory, and the estimation and criticism of statistical models. Recently, there has been renewed interest in divergences in the context of generative adversarial networks (GANs), whose training objectives can be interpreted as minimizing a divergence between the data distribution and the model distribution. Although many divergences exist, they differ in their statistical and computational characteristics. Importantly, not all divergences are equally interpretable: a divergence between samples is considered interpretable if it directly answers the question “Which instances best exhibit the discrepancy between the samples?”
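As an illustration of this interpretability criterion, the sketch below uses the witness function of the maximum mean discrepancy (MMD), a well-known kernel divergence. It is not necessarily the method developed in this project; the publications listed under Outcomes use kernel landmarks and the max-sliced Bures divergence. The Gaussian kernel, the bandwidth of 1.0, the toy data, and the helper names (`gaussian_kernel`, `mmd2_biased`, `mmd_witness`, `most_discrepant`) are all assumptions made for illustration. The witness value at a point is the difference between the average kernel similarity to the first sample and the average similarity to the second. The biased squared-MMD estimate equals the mean witness over the first sample minus the mean witness over the second, so ranking instances by the magnitude of their witness value is one way to answer "which instances best exhibit the discrepancy?"

```python
import numpy as np


def gaussian_kernel(A, B, bandwidth=1.0):
    """Gaussian (RBF) kernel matrix between the rows of A and the rows of B."""
    # Squared Euclidean distances via |a|^2 + |b|^2 - 2 a.b, clipped at 0 for round-off
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth**2))


def mmd2_biased(X, Y, bandwidth=1.0):
    """Biased (V-statistic) estimate of the squared MMD between samples X and Y."""
    Kxx = gaussian_kernel(X, X, bandwidth)
    Kyy = gaussian_kernel(Y, Y, bandwidth)
    Kxy = gaussian_kernel(X, Y, bandwidth)
    return Kxx.mean() + Kyy.mean() - 2.0 * Kxy.mean()


def mmd_witness(X, Y, Z, bandwidth=1.0):
    """Empirical witness f(z) = mean_i k(x_i, z) - mean_j k(y_j, z) at each row of Z.

    f(z) > 0 where X has more kernel mass than Y; f(z) < 0 where Y has more.
    """
    return (gaussian_kernel(Z, X, bandwidth).mean(axis=1)
            - gaussian_kernel(Z, Y, bandwidth).mean(axis=1))


def most_discrepant(X, Y, k=5, bandwidth=1.0):
    """Indices (into np.vstack([X, Y])) of the k instances with the largest |witness|."""
    Z = np.vstack([X, Y])
    w = mmd_witness(X, Y, Z, bandwidth)
    order = np.argsort(-np.abs(w))[:k]  # sort by decreasing |witness|
    return order, w[order]


if __name__ == "__main__":
    # Hypothetical toy data: Y matches X except for a tight cluster moved far away
    rng = np.random.default_rng(0)
    X = rng.normal(size=(200, 2))
    Y = rng.normal(size=(200, 2))
    Y[:80] = rng.normal(loc=5.0, scale=0.1, size=(80, 2))
    idx, w = most_discrepant(X, Y, k=5)
    print("biased MMD^2:", mmd2_biased(X, Y))
    print("most discrepant indices:", idx, "witness values:", w)
```

In this toy run, the top-ranked instances should come from the displaced cluster in `Y` (rows 200 to 279 of the stacked array) and should have negative witness values. In practice, the kernel bandwidth strongly influences which instances are highlighted.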

Outcomes

- Y. Karahan, B. Riaz, and A. J. Brockmeier, “Kernel landmarks: An empirical statistical approach to detect covariate shift,” Workshop on Distribution Shifts, 35th Conference on Neural Information Processing Systems (NeurIPS 2021). Paper, poster, and 5-minute presentation. ECE Research Day poster: winner in the SPCC group.

- A. J. Brockmeier, C. C. Claros Olivares, M. S. Emigh, and L. G. Sanchez Giraldo, “Identifying the instances associated with distribution shifts using the max-sliced Bures divergence,” Workshop on Distribution Shifts, 35th Conference on Neural Information Processing Systems (NeurIPS 2021). Paper, poster, and 5-minute presentation.

Acknowledgements

These research efforts are sponsored by the Department of the Navy, Office of Naval Research, under ONR award number N00014-21-1-2300. We gratefully acknowledge the support of Dr. Tory Cobb and ONR321. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the Office of Naval Research.