Improving Reference Prioritisation With PICO Recognition

Austin J. Brockmeier, Meizhi Ju, Piotr Przybyła, Sophia Ananiadou

BMC Medical Informatics and Decision Making, Vol. 19, No. 1, pp. 1–14, 2019

Overview

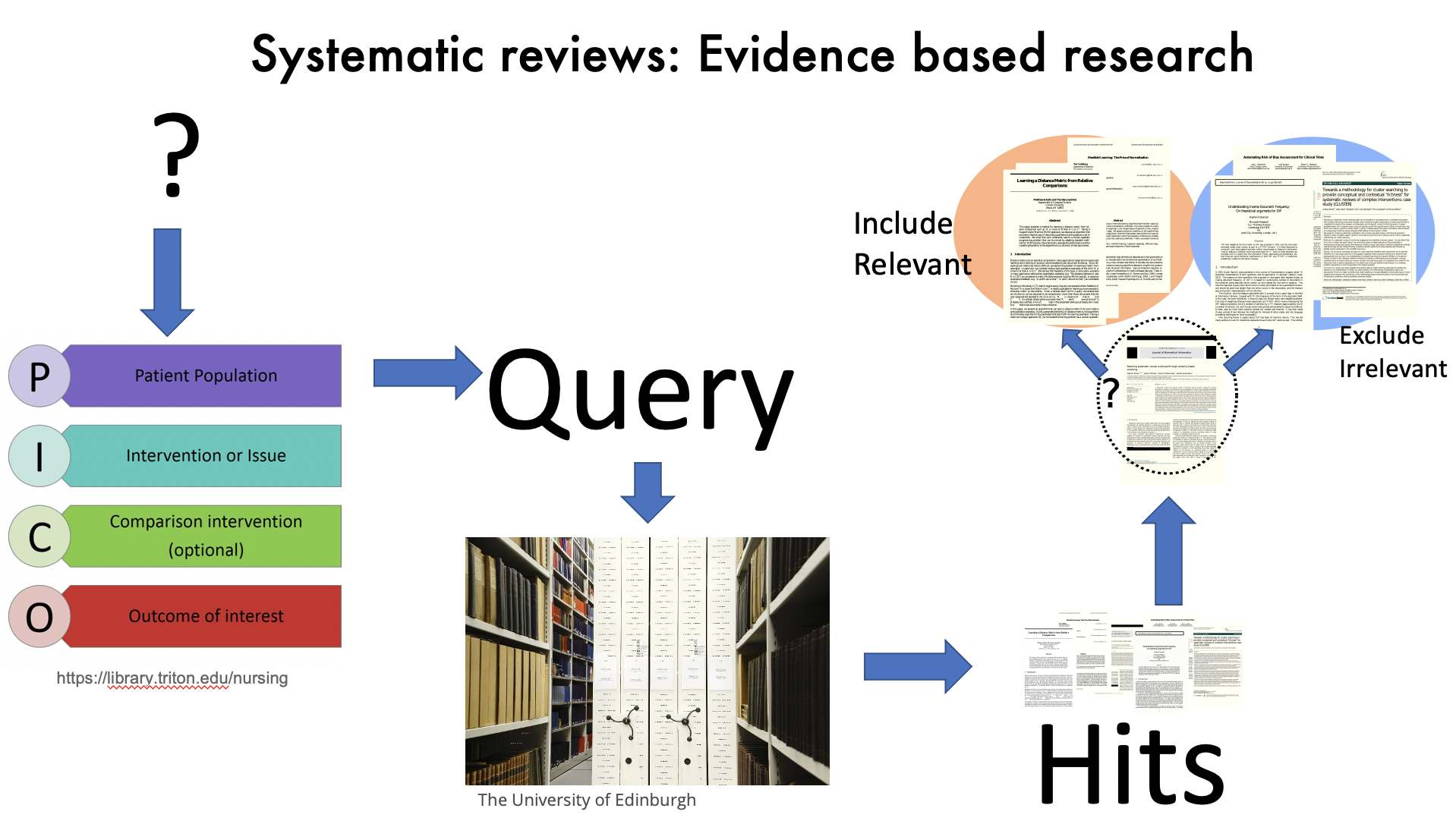

Machine learning can assist with multiple tasks during systematic reviews: it can speed up the retrieval of relevant references during screening, and it can identify and extract information on study characteristics, including the PICO elements of patient/population, intervention, comparator, and outcomes.

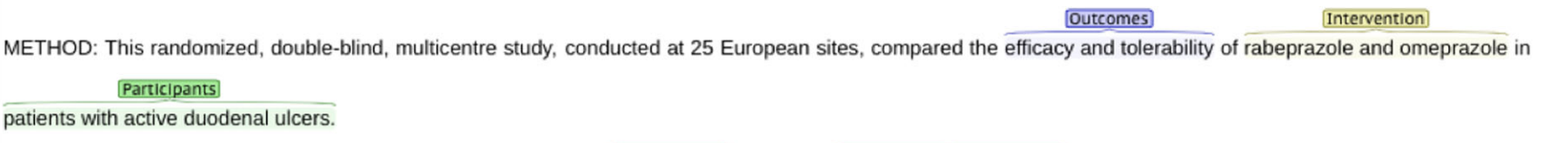

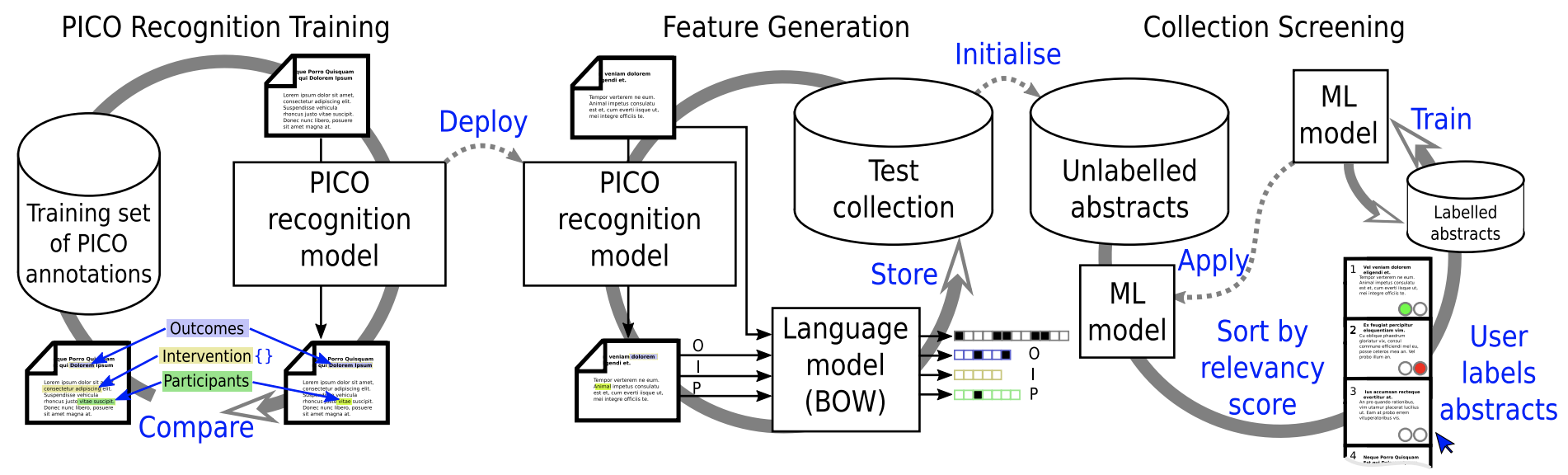

A publicly available corpus of PICO annotations on biomedical abstracts is used to train a named entity recognition model, implemented as a recurrent neural network. This model is then applied to a separate collection of abstracts for references from systematic reviews in the biomedical and health domains. Occurrences of words tagged within specific PICO contexts are used as additional features for a relevancy classification model. Simulations of machine learning-assisted screening are used to evaluate the work saved by the relevancy model with and without the PICO features.
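The feature construction and the screening simulation described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the single-letter tag scheme (`P`, `I`, `O`, with `-` for untagged tokens), the function names `pico_features` and `work_saved`, and the choice of work saved over sampling at a fixed recall level as the metric are all assumptions made here for the example.

```python
import math
from collections import Counter


def pico_features(tokens, tags, outside="-"):
    """Count plain words plus the same words qualified by their PICO tag.

    `tags` holds one label per token (hypothetical scheme: "P", "I", "O",
    or `outside` for tokens that fall in no PICO span).
    """
    features = Counter()
    for token, tag in zip(tokens, tags):
        word = token.lower()
        features[word] += 1                  # ordinary bag-of-words feature
        if tag != outside:
            features[f"{tag}:{word}"] += 1   # word tied to its PICO context
    return features


def work_saved(scores, labels, recall=0.95):
    """Work saved over sampling when screening in descending score order.

    Assumed metric: fraction of references left unscreened once `recall`
    of the relevant ones have been found, minus the (1 - recall) baseline.
    """
    if not any(labels):
        raise ValueError("at least one relevant reference is required")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    needed = math.ceil(recall * sum(labels))
    found = 0
    for screened, (_, label) in enumerate(ranked, start=1):
        found += label
        if found >= needed:
            unscreened = len(ranked) - screened
            return unscreened / len(ranked) - (1 - recall)


# Toy abstract fragment with made-up tags.
tokens = ["Adults", "with", "asthma", "received", "budesonide"]
tags = ["P", "P", "P", "-", "I"]
features = pico_features(tokens, tags)

# Toy ranking: 10 references, the two relevant ones scored highest.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
labels = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
saved = work_saved(scores, labels)
```

Keeping the plain word and the tag-qualified word as separate features lets a downstream classifier weight "asthma as a population term" differently from "asthma anywhere in the abstract", which is the intuition the paragraph above describes.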

Chi-squared tests and the statistical significance of positive predictive values are used to identify words that are more indicative of relevancy within PICO contexts. Inclusion of PICO features improves the performance metric on 15 of the 20 collections, with substantial gains on certain systematic reviews. Examples of words that are more precise within a PICO context help explain this increase. Words within PICO-tagged segments of abstracts are predictive features for determining inclusion. Integrating a PICO annotation model into the relevancy classification pipeline is a promising approach.
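As a rough illustration of the word-level analysis, the sketch below computes a chi-squared statistic and a positive predictive value from a 2x2 table of word occurrence against relevance. The function names and all counts are hypothetical and chosen only for the example; they are not results from the paper, and the significance test on the positive predictive values is omitted.

```python
def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared statistic for a 2x2 contingency table.

    a: relevant references containing the word
    b: irrelevant references containing the word
    c: relevant references without the word
    d: irrelevant references without the word
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0  # a degenerate table carries no association signal
    return n * (a * d - b * c) ** 2 / denom


def positive_predictive_value(a, b):
    """Fraction of references containing the word that are relevant."""
    return a / (a + b) if (a + b) else 0.0


# Illustrative counts over 100 references, 10 of them relevant.
# The same word counted anywhere in the abstract ...
plain = {"a": 9, "b": 21, "c": 1, "d": 69}
# ... versus counted only when tagged inside a PICO span.
tagged = {"a": 8, "b": 2, "c": 2, "d": 88}

plain_chi2 = chi_squared_2x2(**plain)
tagged_chi2 = chi_squared_2x2(**tagged)
plain_ppv = positive_predictive_value(plain["a"], plain["b"])
tagged_ppv = positive_predictive_value(tagged["a"], tagged["b"])
```

In this made-up case the tagged occurrence covers slightly fewer relevant references but is far more precise, which is the kind of pattern the paragraph above refers to.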