The ability to produce dynamic Depth of Field effects in live video streams was until recently a quality unique to movie cameras.

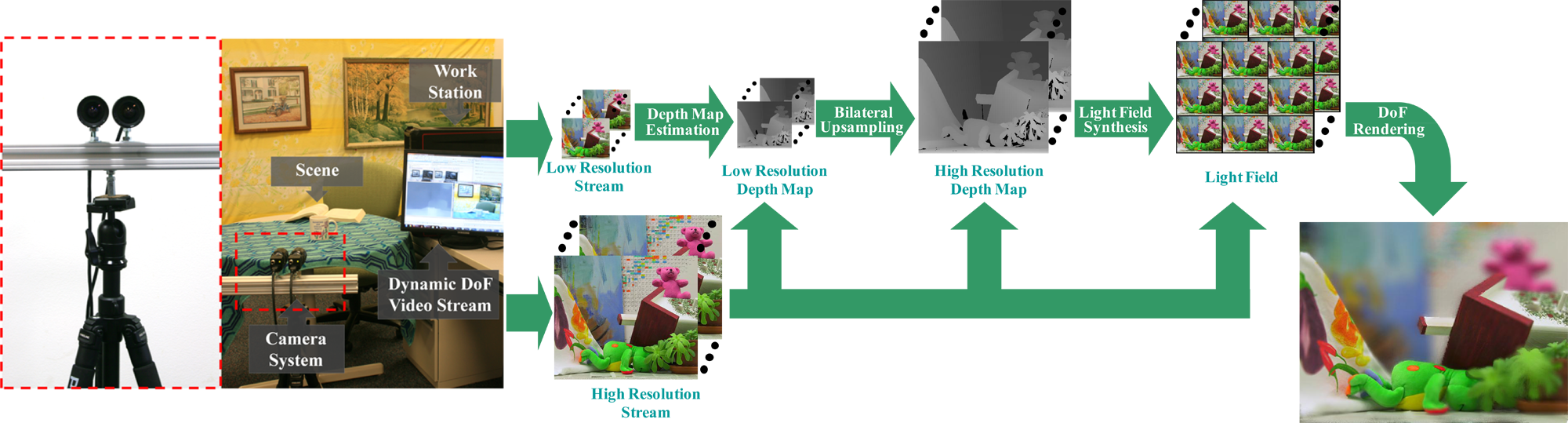

In this paper, we present a computational camera solution coupled with real-time GPU processing to produce runtime dynamic Depth of Field effects. We first construct a hybrid-resolution stereo camera with a high-res/low-res camera pair. We recover a low-res disparity map of the scene using GPU-based Belief Propagation and subsequently upsample it via fast Cross/Joint Bilateral Upsampling. With the recovered high-resolution disparity map, we warp the high-resolution video stream to nearby viewpoints to synthesize a light field towards the scene. We exploit parallel processing and atomic operations on the GPU to resolve visibility when multiple pixels warp to the same image location.
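
The visibility rule can be illustrated with a minimal sequential sketch in Python. It is not the CUDA implementation: the function name `warp_scanline`, the sign convention for `view_offset`, and the use of `None` for holes are assumptions made for illustration. The comparison inside the loop stands in for what an atomic max on the GPU would do when several threads write to the same target pixel.

```python
def warp_scanline(colors, disparities, view_offset):
    """Forward-warp one scanline to a viewpoint shifted by view_offset.

    When several source pixels land on the same target, the one with the
    largest disparity (closest to the camera) is kept. This sequential
    check is a stand-in for an atomic max on the GPU (an assumption).
    """
    width = len(colors)
    warped = [None] * width          # None marks holes: no source pixel landed here
    best_disparity = [-1.0] * width  # winning disparity recorded per target pixel
    for x in range(width):
        target = x + int(round(view_offset * disparities[x]))
        if 0 <= target < width and disparities[x] > best_disparity[target]:
            best_disparity[target] = disparities[x]
            warped[target] = colors[x]
    return warped
```

For example, with colors `['a', 'b', 'c', 'd']`, disparities `[0, 0, 2, 0]` and a view offset of `-1`, pixels `'a'` and `'c'` both land on position 0, and `'c'` wins because it is nearer to the camera; position 2 becomes a hole.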

We begin by capturing a stereo image pair: one color image and one grayscale image.

We first downsample the high-resolution color image and convert it to grayscale, then recover a low-resolution disparity map on the GPU using belief propagation.

We subsequently upsample the disparity map to full resolution by applying the fast cross bilateral filter.

Finally, we combine the upsampled disparity map and the high-resolution input image to generate a light field, and synthesize dynamic Depth of Field effects through light field rendering.

To generate the low-resolution disparity map, we implement a GPU-based stereo matching algorithm in CUDA. Our solution is based on belief propagation (BP), which lends itself well to parallelism on the GPU.

We use a hierarchical implementation to decrease the number of iterations needed for message value convergence.

We then apply a checkerboard scheme to split the pixels when passing messages in order to reduce the number of necessary operations and halve the memory requirements.
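
A minimal sketch of the checkerboard split, assuming the usual parity rule on pixel coordinates (the function name `checkerboard_sites` and the exact parity convention are hypothetical). On each iteration only one color of the checkerboard sends messages, and every 4-connected neighbor of a sending pixel belongs to the other color, so only half of the messages need to be computed and stored at a time.

```python
def checkerboard_sites(width, height, iteration):
    """Return the (x, y) pixels that send messages on this iteration.

    Pixels are split by the parity of x + y; the active half alternates
    from one iteration to the next.
    """
    return [(x, y)
            for y in range(height)
            for x in range(width)
            if (x + y + iteration) % 2 == 0]
```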

We also use a two-pass algorithm to reduce the time needed to generate each message, using the truncated linear model for data and smoothness costs.
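
The page does not spell out the two-pass update, so the following is a sketch under the assumption that it is the well-known linear-time forward/backward scheme for truncated linear smoothness costs of the form min(c·|f_p − f_q|, d). The names `two_pass_message`, `slope` (c) and `truncation` (d) are hypothetical. The input `h` holds, for each disparity label, the data cost plus the incoming messages.

```python
def two_pass_message(h, slope, truncation):
    """Compute m[fq] = min over fp of (h[fp] + min(slope * |fp - fq|, truncation)).

    A forward and a backward sweep build the lower envelope of the linear
    cones in O(k) for k labels, instead of the O(k^2) brute-force minimum.
    """
    m = list(h)
    # Forward pass: propagate costs from smaller labels to larger ones.
    for fq in range(1, len(m)):
        m[fq] = min(m[fq], m[fq - 1] + slope)
    # Backward pass: propagate costs from larger labels to smaller ones.
    for fq in range(len(m) - 2, -1, -1):
        m[fq] = min(m[fq], m[fq + 1] + slope)
    # Truncation: no label pays more than the cheapest label plus the cap.
    cap = min(h) + truncation
    return [min(value, cap) for value in m]
```

The result matches the brute-force minimum over all label pairs, which makes the sketch easy to check on small inputs.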

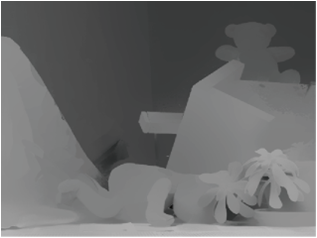

We first apply a Gaussian high-pass filter to identify edge and texture pixels. We then use standard cross bilateral filtering (CBF) to estimate the disparity values of these pixels.

In parallel, we downsample the color image to mid-resolution, apply cross bilateral upsampling (CBU) to the low-resolution disparity map and the mid-resolution color image to obtain a mid-resolution disparity map, and subsequently upsample that disparity map to high resolution using standard bilinear upsampling.

Finally, we perform high-frequency compensation using the edge disparity map. Compared with standard CBU, our scheme only needs to upsample a small portion of the pixels and hence is much faster.
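
For reference, the standard cross/joint bilateral upsampling that this scheme accelerates can be sketched on a single scanline. This is the baseline form only, not the faster edge-compensated variant described above; the function name, the parameters `radius`, `sigma_spatial` and `sigma_range`, and the assumption that the guide is exactly `factor` times longer than the low-resolution disparity are all illustrative choices.

```python
import math

def joint_bilateral_upsample_1d(low_disparity, guide, factor, radius=2,
                                sigma_spatial=1.0, sigma_range=0.1):
    """Upsample a low-res disparity scanline using a high-res guide signal.

    Each output value is a weighted average of nearby low-res disparities.
    Weights combine spatial closeness (measured in low-res coordinates)
    with similarity of the guide values, so disparities do not bleed
    across intensity edges. Assumes len(guide) == len(low_disparity) * factor.
    """
    high = []
    for p in range(len(guide)):
        p_low = p / factor               # fractional position in the low-res grid
        center = int(p_low)
        total = 0.0
        weight_sum = 0.0
        first = max(0, center - radius)
        last = min(len(low_disparity), center + radius + 1)
        for q_low in range(first, last):
            q = q_low * factor           # guide sample matching this low-res pixel
            spatial = math.exp(-((p_low - q_low) ** 2) / (2 * sigma_spatial ** 2))
            diff = guide[p] - guide[q]
            similarity = math.exp(-(diff ** 2) / (2 * sigma_range ** 2))
            total += spatial * similarity * low_disparity[q_low]
            weight_sum += spatial * similarity
        high.append(total / weight_sum)
    return high
```

With a step edge in the guide, for instance `guide = [0, 0, 0, 0, 1, 1, 1, 1]` and `low_disparity = [2, 2, 8, 8]` at `factor=2`, the upsampled disparities stay close to 2 on the dark side and close to 8 on the bright side instead of blending across the edge.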


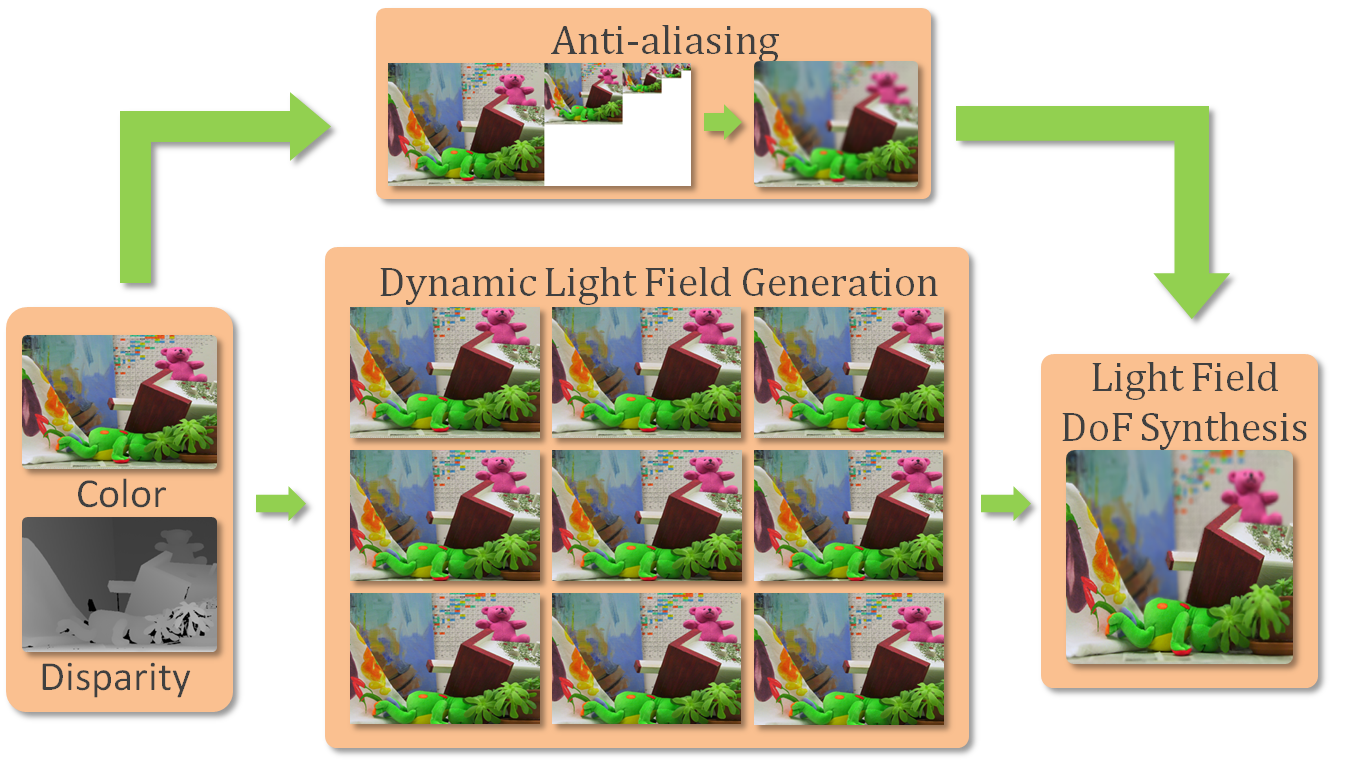

Here we show our DoF synthesis pipeline:

1. From a single rasterized view and its disparity map, we dynamically generate a light field towards the scene.

2. We then synthesize Depth of Field effects from the light field.

3. Finally, to reduce aliasing artifacts, we use an image-space filtering technique to compensate for spatial undersampling.
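
Steps 1 and 2 can be sketched on one scanline, assuming a simple shift-and-average model of the synthetic aperture: each view offset produces a warped copy of the scanline, refocused so that pixels at `focal_disparity` do not move, and the copies are averaged. The function name, the parameters, and the averaging rule are assumptions for illustration; the anti-aliasing filter of step 3 is not covered.

```python
def synthesize_dof_scanline(intensity, disparities, focal_disparity, view_offsets):
    """Average warped views of one scanline to simulate a finite aperture.

    Pixels whose disparity equals focal_disparity land on themselves in
    every view and stay sharp; other pixels spread out and blur.
    """
    width = len(intensity)
    total = [0.0] * width
    count = [0] * width
    for u in view_offsets:
        view = [None] * width                # None marks holes in this view
        best = [float("-inf")] * width       # nearest disparity seen per target
        for x in range(width):
            shift = int(round(u * (disparities[x] - focal_disparity)))
            target = x + shift
            # Visibility: the pixel with the largest disparity wins a collision.
            if 0 <= target < width and disparities[x] > best[target]:
                best[target] = disparities[x]
                view[target] = intensity[x]
        for x in range(width):
            if view[x] is not None:
                total[x] += view[x]
                count[x] += 1
    # Positions never covered by any view fall back to 0.0.
    return [total[x] / count[x] if count[x] else 0.0 for x in range(width)]
```

A scanline whose disparities all equal the focal disparity is returned unchanged, while a bright pixel away from the focal plane is spread over several positions and its peak value drops.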

[PDF(11.1MB)] [Video(65.1MB)]