University of Delaware


Abstract

There is an emerging interest in using high-dimensional datasets beyond 2D images for saliency detection. Examples include 3D data based on stereo matching and Kinect sensors and, more recently, 4D light field data. However, these techniques adopt very different solution frameworks, both in the types of features and in the procedures for using them. In this paper, we present a unified saliency detection framework for handling heterogeneous types of input data. Our approach builds dictionaries using data-specific features. Specifically, we first select a group of potential foreground superpixels to build a primitive saliency dictionary. We then prune the outliers in the dictionary and test on the remaining superpixels to iteratively refine the dictionary. Comprehensive experiments show that our approach universally outperforms state-of-the-art solutions on all 2D, 3D and 4D data.
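The refinement loop described in the abstract can be illustrated with a rough sketch. This is not the paper's implementation: it substitutes ridge-regularized least squares for the weighted sparse coding, prunes outliers by distance to the dictionary mean, and assumes that superpixels well reconstructed by the saliency dictionary are the salient ones. The names `reconstruction_error`, `refine_saliency`, `keep_frac`, `lam` and `n_iter` are hypothetical.

```python
import numpy as np

def reconstruction_error(X, D, lam=0.1):
    """Squared error of reconstructing each row of X (n, d) from atoms D (k, d).

    Uses ridge regression as a stand-in for sparse coding (an assumption).
    """
    G = D @ D.T + lam * np.eye(D.shape[0])      # (k, k) regularized Gram matrix
    A = np.linalg.solve(G, D @ X.T).T           # (n, k) reconstruction codes
    R = X - A @ D                               # residuals
    return np.sum(R ** 2, axis=1)

def refine_saliency(X, init_fg, n_iter=3, keep_frac=0.8, lam=0.1):
    """Iteratively refine a foreground dictionary built from superpixel features X."""
    fg = np.asarray(init_fg)
    for _ in range(n_iter):
        D = X[fg]
        # Prune outliers: drop the atoms farthest from the dictionary mean.
        dist = np.linalg.norm(D - D.mean(axis=0), axis=1)
        n_keep = max(1, int(keep_frac * len(fg)))
        D = D[np.argsort(dist)[:n_keep]]
        # Test all superpixels against the pruned dictionary.
        err = reconstruction_error(X, D, lam)
        saliency = 1.0 - (err - err.min()) / (err.max() - err.min() + 1e-12)
        # Rebuild the dictionary from superpixels scored above average.
        fg = np.flatnonzero(saliency > saliency.mean())
    return saliency
```

In this sketch `X` would hold one feature vector per superpixel (color, depth, or light field cues, depending on the data type) and `init_fg` the indices of the initial foreground candidates.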

Publications

Nianyi Li, Bilin Sun, Jingyi Yu. A Weighted Sparse Coding Framework for Saliency Detection. CVPR 2015. [pdf][Bibtex]

Results

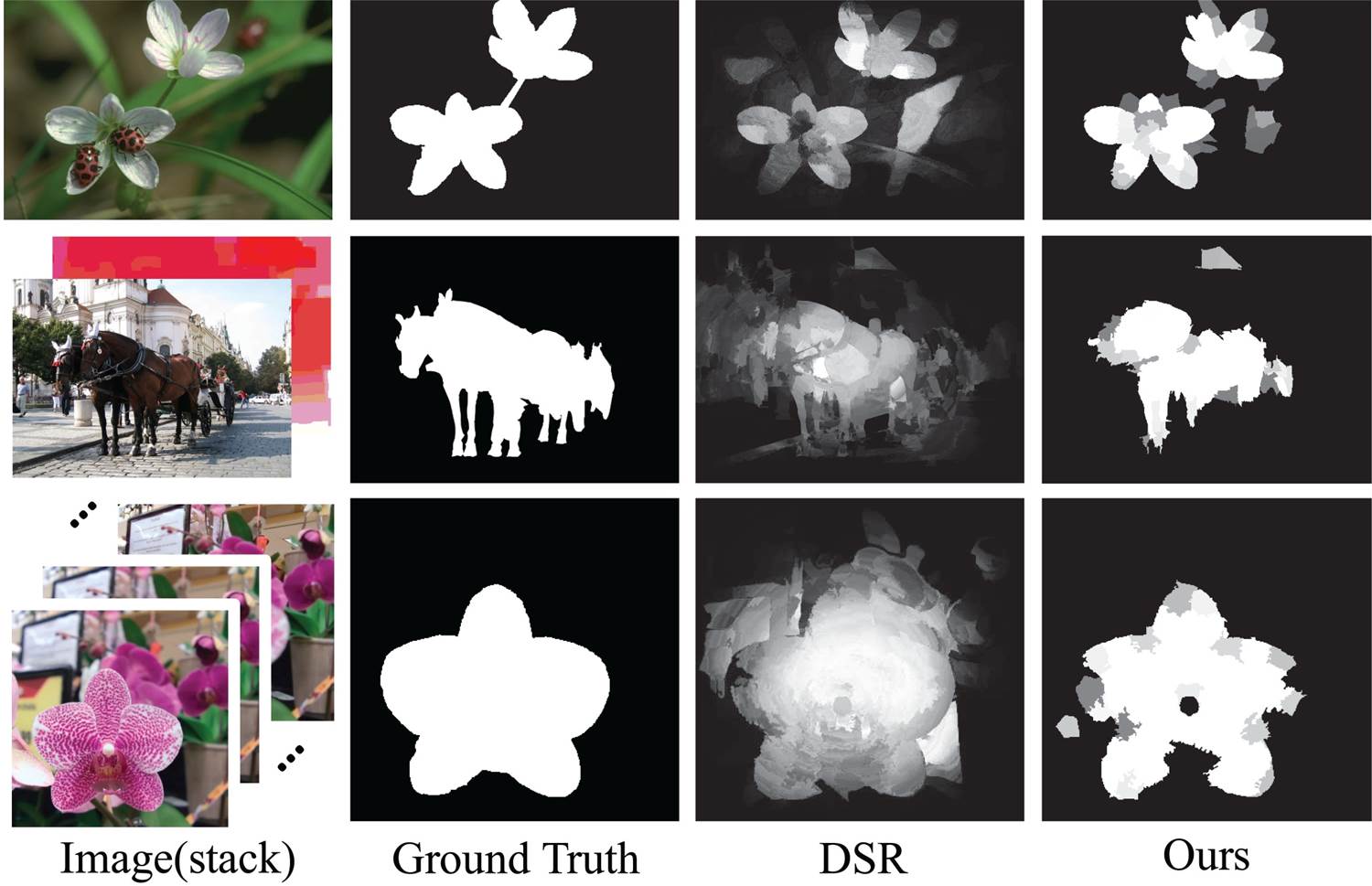

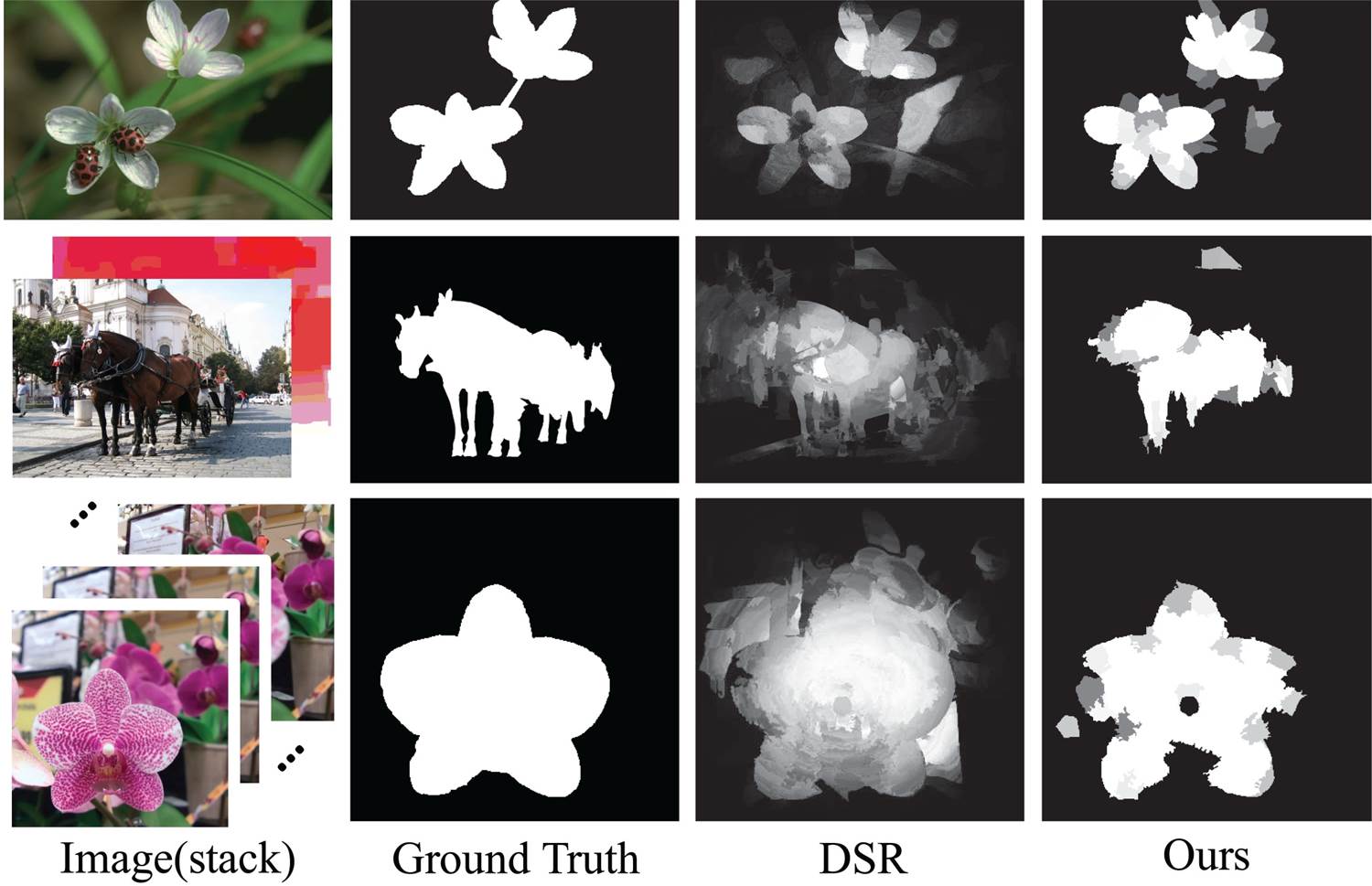

For each example below, we show results by our model and the ground-truth on: 2D (MSRA-1000, SOD), 3D (SSB), 4D (LFSD).

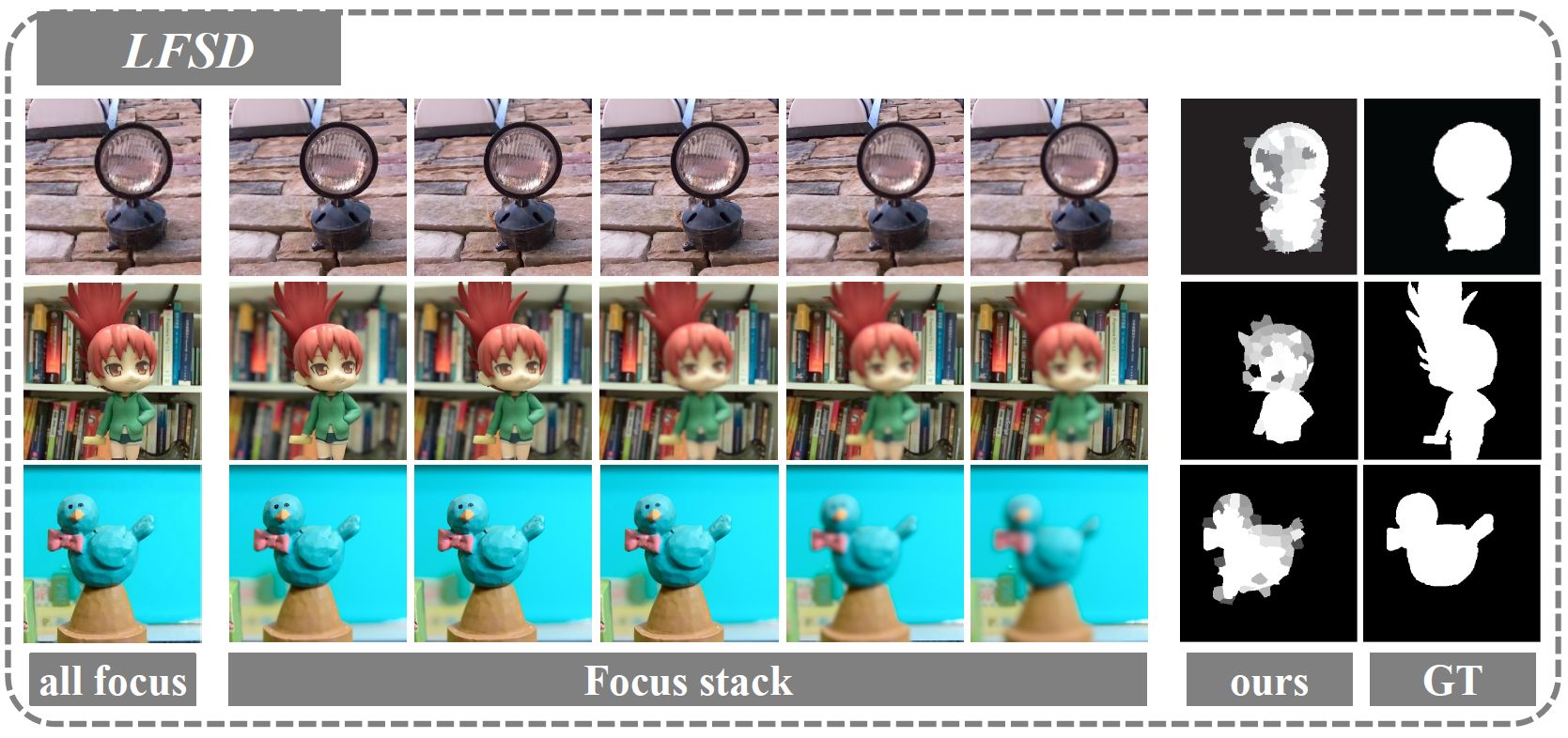

Quantitative Comparison

Precision-Recall comparison with state-of-the-art methods. For details on the abbreviations of methods please refer to our paper.
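For readers who want to evaluate the provided saliency maps themselves, a precision-recall curve can be computed along the following lines. This is a minimal sketch of the common fixed-threshold protocol, which this page does not spell out, so the exact evaluation settings are an assumption; `precision_recall_curve` and `n_thresholds` are hypothetical names.

```python
import numpy as np

def precision_recall_curve(saliency, gt, n_thresholds=256):
    """Precision and recall of a saliency map binarized at evenly spaced thresholds.

    saliency: float array with values in [0, 1]
    gt:       boolean ground-truth mask of the same shape
    """
    saliency = np.asarray(saliency, dtype=float)
    gt = np.asarray(gt, dtype=bool)
    thresholds = np.linspace(0.0, 1.0, n_thresholds)
    precision = np.empty(n_thresholds)
    recall = np.empty(n_thresholds)
    n_pos = gt.sum()
    for i, t in enumerate(thresholds):
        pred = saliency >= t
        tp = np.logical_and(pred, gt).sum()
        n_pred = pred.sum()
        # Convention (an assumption): precision is 1 when nothing is predicted.
        precision[i] = tp / n_pred if n_pred else 1.0
        recall[i] = tp / n_pos if n_pos else 0.0
    return thresholds, precision, recall
```

Averaging the per-image precision and recall at each threshold over a dataset yields one curve per method.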

Downloads

We provide our saliency results on the following datasets. For saliency maps of other methods mentioned in our paper, please contact nianyi AT uel dot edu.

Results

Code