Scene understanding

People: Gowri Somanath, Rohith MV and Chandra Kambhamettu

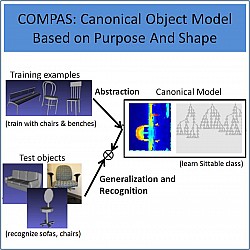

Topics: Scene segmentation, Object discovery, Object recognition, Function-based object classification, 3D shape abstraction and generalization.

Questions this work seeks to allow a machine to answer:

- What objects do I see in this scene?

- Which objects do I already know?

- Can I update my knowledge about the objects I knew and learn new ones?

- Is this an object I can sit on?

- Which surface of this object is useful for sitting? Where on this object can I place things?

- I had only seen chairs before, but I can recognize that a sofa is also sittable. Can I add that to my canonical model of the sittable class?

- Where am I? Is this a kitchen or living room?

- Where am I? Which place is it?


Intelligent robots that interact and blend in with humans have been one of the core goals of AI. One of the important sensors on such a robot is its vision system. Humans are known to derive maximal sensory feedback and experience from what they see. Enabling a robot to imitate even a fraction of that interaction and derived knowledge is a challenging task. It has become clear that the task involves devising algorithms that allow for adaptive and incremental instance-based learning. We study some of the basic problems in designing such vision systems. The three questions we seek to answer are as follows.

Scene segmentation: How do we enable the vision system to segment a scene into its constituent objects? When humans observe a scene, the most trivial task is to identify the different objects present. For example, given a mug on a table, recognizing that two objects are present and which parts belong to each is seemingly easy. Achieving this capability in a machine, however, is not trivial. Taking inspiration from human vision and cognitive studies, we have developed algorithms to perform scene segmentation. Since perfect segmentation cannot be guaranteed for every scene, we allow the system to learn from past experience and apply it to future cases.

Once the objects have been segmented, the next challenge is to store them in a way that allows fast recall. This is the second challenge we address: Organization and recognition of objects. We have developed a framework to organize objects such that recognizing any particular object involves searching a reduced search space rather than all known objects.

Lastly, we address the question of generalizing object examples into classes based on their functionality and use by humans. For example, though there are many different styles and sizes of chairs, conceptually a seat with a backrest defines a basic chair. For all operational purposes, an intelligent robot only needs to be able to recognize or identify a chair when the need arises. Clearly, a fixed set of manually derived rules does not lead to a scalable solution. Also, a description that is optimal for humans is not necessarily optimal for a machine. Hence, we propose techniques that allow the system to derive rules for each class based on the examples it encounters. A scheme is developed to help arrive at the rules. The derived rules themselves depend on the robot's exposure and environment. Though the rules are tested on the geometry and shapes of objects, the classification is based on human action on the object. For example, the objects on which humans mostly sit belong to the virtual class of 'chairs'. Similarly, what humans 'sleep' on is a 'bed'.
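As a rough illustration of deriving class rules from examples rather than hand-writing them, the toy sketch below labels each object by the action humans most often perform on it, then records the range of each geometric feature seen per class. It is not the method of the publications listed on this page. The feature names (`support_height_m`, `support_area_m2`), the interval-style rule, the `slack` parameter, and all data values are assumptions made up for the example.

```python
from collections import Counter, defaultdict


def functional_labels(action_log):
    """Label each object with the action humans performed on it most often."""
    counts = defaultdict(Counter)
    for object_id, action in action_log:
        counts[object_id][action] += 1
    return {obj: c.most_common(1)[0][0] for obj, c in counts.items()}


def derive_rules(labels, features):
    """For each functional class, record the (min, max) range of every feature."""
    rules = {}
    for obj, label in labels.items():
        class_rule = rules.setdefault(label, {})
        for name, value in features[obj].items():
            lo, hi = class_rule.get(name, (value, value))
            class_rule[name] = (min(lo, value), max(hi, value))
    return rules


def classify(rules, feats, slack=0.1):
    """Return classes whose feature ranges, widened by a relative slack, contain feats."""
    matched = []
    for label, class_rule in rules.items():
        inside = True
        for name, (lo, hi) in class_rule.items():
            margin = slack * abs(hi)  # assumed tolerance, proportional to the upper bound
            if not (lo - margin <= feats[name] <= hi + margin):
                inside = False
                break
        if inside:
            matched.append(label)
    return matched


# Hypothetical observations of human actions on three objects.
action_log = [
    ("chair_a", "sit"), ("chair_a", "sit"), ("chair_a", "place"),
    ("stool_b", "sit"),
    ("bed_c", "sleep"), ("bed_c", "sit"), ("bed_c", "sleep"),
]

# Hypothetical geometric measurements of each object's main support surface.
features = {
    "chair_a": {"support_height_m": 0.45, "support_area_m2": 0.18},
    "stool_b": {"support_height_m": 0.60, "support_area_m2": 0.10},
    "bed_c": {"support_height_m": 0.50, "support_area_m2": 2.00},
}

labels = functional_labels(action_log)
rules = derive_rules(labels, features)

# An unseen object is tested against the learned geometric ranges.
unseen = {"support_height_m": 0.48, "support_area_m2": 0.15}
print(classify(rules, unseen))
```

Because the ranges come only from the examples seen so far, the rules depend on the system's exposure. Adding a newly confirmed example, such as a sofa that humans were observed sitting on, and calling `derive_rules` again would widen the 'sit' class.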

Related publications

- Gowri Somanath, Rohith MV, Dimitris Metaxas, Chandra Kambhamettu, D-Clutter: Building object model library from unsupervised segmentation of cluttered scenes, IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2009, pp. 2783-2789. (Acceptance rate 22.1%) Poster (jpg)

- Gowri Somanath, Chandra Kambhamettu, Abstraction and Generalization of 3D structure for recognition in large intra-class variation, The 10th Asian Conference on Computer Vision (ACCV), R. Kimmel, R. Klette, and A. Sugimoto (Eds.): ACCV 2010, Part III, LNCS 6494, pp. 483–496, Springer-Verlag Berlin Heidelberg 2011.

- Gowri Somanath, Chandra Kambhamettu, Arrangement based image representation for scene recognition, International Conference on Pattern Recognition (ICPR) 2012.